Student Data is the New Oil

Does the recent repackaging of University of Michigan public datasets suggest that research data should be closed by default?

One of the reasons LLMs are so wildly effective at predicting human language is that they’ve been fed a lot of human language. LLMs work by predicting the next word (more precisely, the next token) based on patterns in their training data, and when that data contains written text from webpages, online comments, and even transcripts of YouTube videos (which OpenAI’s speech-to-text model Whisper makes possible), the result is pretty good.

Tech companies are pretty good at hiding where their data comes from, but we do know the source in a few cases. Meta’s LLaMA appears to have drawn on a book dataset that included pirated books from LibGen. And GPT-3’s dataset was disclosed in the paper announcing it.

OpenAI hasn’t disclosed data for the later versions of the GPT series, but we can assume that they include this dataset and augment it with additional data.

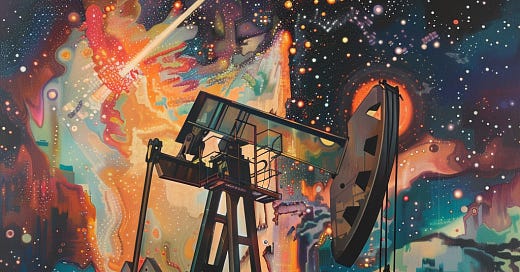

Once the entire web is scraped and YouTube is milked for human speech, additional data starts to look pretty difficult to come by—especially since scrapeable text and even videos or audio shared on the web are increasingly likely to be generated by AI. Mathematician Clive Humby called data “the new oil” because it was valuable, but also needed to be refined. We can extend this metaphor to note that as oil/data is increasingly scarce, people will go to greater lengths to obtain what’s left. Where can reliably human-produced language be found now?

Which brings me to the drama that made the University of Michigan comms team sweat last week: Was UM selling student data for the purpose of training LLMs??

On Feb 15, tech industry veteran Susan Zhang tweeted about a dataset someone was trying to sell her: “University of Michigan LLM Training Data.” This dataset apparently contains 85 hours of recorded academic speech and 829 academic papers for the low, low price of $25,000. She shared the email she received as an image in the tweet, along with a withering suggestion that UM was selling out.

Several of the replies to her post noted that this was a pretty small dataset for the money, although it did sound like quality data. Others suggested that it sounded like a scam and that she should verify her sources before boosting it (in other words, there’s no way a university would do this!). Some said the seller might be repackaging data that was already public.

Joseph Cox did a story on it for 404 Media. Zhang had redacted the seller’s name, but 404 Media identified the seller as Catalyst Research Alliance and requested a free sample from the company’s website. Cox describes the audio sample and the transcript:

“So, um, does everybody have a handout, for today?” a speaker says at the start of the audio. The accompanying transcript indicates this audio was of a “Graduate Cellular Biotechnology Lecture” in February 1, 1999 with a group of more than two dozen students.

The transcript also includes a note about the need to purchase a license for any commercial use of the data, Cox writes.

UM comms issued a statement about Zhang’s post clarifying that the university was not selling the dataset, that the third-party vendor’s sales had been halted, and that the dataset was already publicly available to researchers. Moreover, the data had been collected with consent:

The content referenced in the post includes papers and speech recordings that had been voluntarily contributed by student volunteers participating, under signed consent, in two research studies, one in 1997 – 2000, and a second in 2006 – 2007. None of the papers or recordings included identifying information, such as names or other personal data.

Of course, a little research on a graduate faculty member at UM teaching a “Cellular Biotechnology” seminar in Spring 1999 would yield the faculty member’s name. A little more research, especially with the help of some AI voice recognition, could yield the students’ names. That technology would not have been as available in 1999, so the issue of consent here, which may have been clear at the time, is now pretty murky. As someone who was in college in 1999, I can say with confidence that no one consenting to this recording would have considered its potential use for training LLMs.

It appears that Catalyst Research Alliance had been trying to resell data that was already public, as Emilie A pointed out in Zhang’s replies. The two datasets the company was drawing from are almost certainly MICASE and MICUSP, both described on the UM LSA English Language Institute website. Catalyst boasts 829 academic papers, exactly the number in MICUSP (the Michigan Corpus of Upper-Level Student Papers). MICASE (the Michigan Corpus of Academic Spoken English) doesn’t specify how many hours of recordings it contains, but my own browsing of the audio suggests that Catalyst’s promised 85 hours looks about right.

How was consent obtained for these datasets? The first three minutes of this recording document the consent process, noting that the recording was part of MICASE and approved by the IRB, that the researchers had obtained the instructor’s permission, and that participants had the right to decline recording or to withdraw after the recording. The stated purpose of the audio collection was to understand varieties of natural speech for research and teaching purposes. This is all by-the-book consent language for university research, totally above board. John Swales, a renowned linguist of academic discourse and now Professor Emeritus at UM, is mentioned as leading the study’s data collection. While there are a few questions after the researcher reads the consent document, the students in the course clearly give their consent.

So, I want to be clear: I don’t think UM researchers did anything wrong here. The data was obtained for research purposes through what appears to be a standard IRB review and consent process. It was then made available in corpus form through UM’s ELI website as a generous act of data sharing, which is common among researchers and often the most ethical choice for universities like UM, because it allows researchers at other, under-resourced institutions to contribute to research as well.

However, the consent process in that recording doesn’t specify the potential availability of data on the web. That’s unlikely to have been assumed in 1999, or even in 2007, the latest date in the dataset. So, do we have a problem here, or what?

Maybe. First, I think this incident should be a warning to universities about data sharing. Universities have access to a lot of human-produced writing, especially in the form of high-quality academic prose and cross-cultural conversation. Universities also hold historical datasets such as MICASE and MICUSP that contain reliably human data, and this data will be increasingly tempting for tech companies. Even now, with academic integrity policies in place, student work is still more reliably human than the raw web, Mechanical Turk, or other potential data sources. We need to watch access to our students’ work, even for stated research goals and program improvement, to make sure that it doesn’t travel outside of those uses.

We also need to watch our archives. A faculty colleague of mine who works in archives containing non-English materials recently mentioned that they’d been contacted directly by a tech company seeking access to these archives (they declined). I got the sense that these tech companies knew better than to contact the librarians and archivists; information professionals may be uniquely attuned to these data issues. But individual faculty? Faculty who might agree to open up their archives or their classes for data collection to train LLMs without understanding the implications? Not everyone is paying close attention to the data privacy issues at stake here. And baked into university research ethics is an orientation toward sharing. We generally don’t get paid for our data or pay for anyone else’s, and we can do others a solid by sharing it freely.

But I think we should be wary of such free sharing now. Universities need to pay attention to where and to whom our data is going, and consider future uses of data as well. This is going to be an increasing challenge. AI companies are hungry for certified human data, and we have access to such data. I’ve written before about the dangers of open data and open LLMs with the 2022 case of GPT-4Chan. Perhaps these incidents should serve as lessons that datasets—at least those representing natural language—should now, sadly, be closed by default.
